Design Chat Messenger App

Definition

A Software application to provide instant text based messaging services to users (from mobile or wireless)

Requirements

Functional Requirement

- Support 1-1 converation with users

- Keep track of offline/online users

- Support persistent storage (chat history)

Non-Functional Requirement

- Low latency : System should provide real time chat experience to users

- Consistency : System should provide same message to users in all devices

- Available : System must be highly avialable

- Reliable : System should not lose chat/user data

Capacity Management

Users

Assumptions:

- Daily users : 500 M

- Each user send 40 messages per day

- Total msg per day = 500M * 40 = 20 Billion msg per day

- Total space = 20 B * 100 bytes (each message size) = 2 TB per day

Chat History

Assumptions:

- 5 years of data storage

- 2 TB * 365 days * 5 years ~= 3.6 PB [Assumption : Data is not replicated , not compressed]

Bandwidth estimation

Assumptions:

- Incoming data for each second = 2 TB (total data per day)/ 86400 sec ~= 25 MB/s

- Bandwidth 25MB/s is required for both upload and download.

High level Design

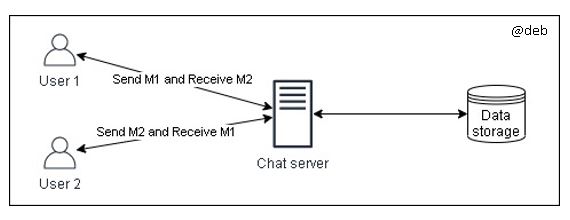

High level steps:

- User-A sends a message to User-B through the chat server.

- The server receives the message and sends an acknowledgment to User-A.

- The server stores the message in its database and sends the message to User-B.

- User-B receives the message and sends the acknowledgment to the server. 5. The server notifies User-A that the message has been delivered successfully to User-B.

Simple component design

- Receive incoming messages and deliver outgoing messages.

- Store and retrieve messages from the database.

- Keep a record of which user is online or has gone offline, and notify all the relevant users about these status changes.

Message Handling

- Pull model : Users can periodically ask the server if there are any new messages for them.

- Push model : Users can keep a connection open with the server and can depend upon the server to notify them whenever there are new messages.

We chose second approach, where all the active users keep a connection open with the server, then as soon as the server receives a message it can immediately pass the message to the intended user. This way, the server does not need to keep track of the pending messages, and we will have minimum latency, as the messages are delivered instantly on the opened connection.

A few questions

-

How to keep open connection ? : Use HTTP Long polling technique

-

How to keep track of opened connection : Use hash table, where “key”-> UserID and “value” -> COnnection object So whenever the server receives a message for a user, it looks up that user in the hash table to find the connection object and sends the message on the open request

-

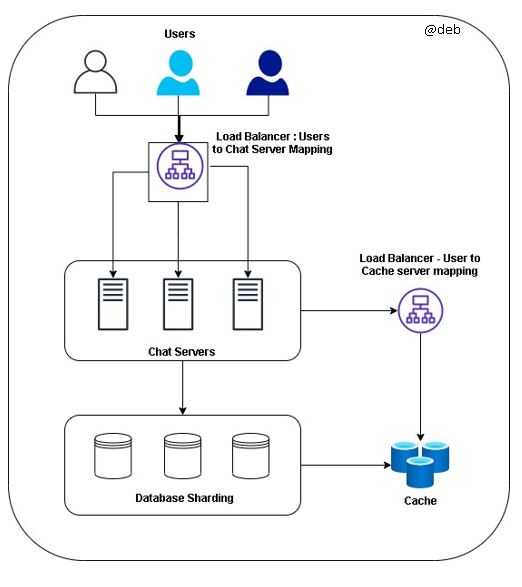

Estimate number of Servers : Assume 500 million connections at any time. Assuming a modern server can handle 50K concurrent connections at any time, we need 10,000 such servers.

-

Which server holds the connection to which user : Introduce a software load balancer in front of chat servers which can map each UserID to a server to redirect the request

Sequencing of Messages

- User 1 (sends msg M1 to U2) ——> Server ——> User 2 ( msg M1 recieved at T1)

Mewanhile,

- User 2 (sends msg M2 to U1) ——> Server ——> User 1 (recieves msg M1 to U2)

Now , server checks T2 > T1 and then server sends M1 to U2 and M2 to U1

There is a problem (Consistency)

- In this way , U1 sees M1 and then M2. But U2 sees M2 and then M1

Solution We need to keep sequence number so as users can see the same sequnce of messages across all users and devices

Storing and retrieving the messages from the database

2 Options

- Start Multi-threading process

- Start asynchronous request to database to store messages (efficient way)

Read more about asynchronous from here

Designing the Database

Criterias to choose the database :

- Database with high rate if small updates

- Fetch records quickly

- Low latency

- Handle retry failed request and log them

- Retry the loggged request

Application Flow Design

Read about cache from here

Data Processing Design

Data Partition

- Partition data based on UserID and MessageID

- User message is ideal to put in less shards . More number of shards will result in slow performance

Load Balancer

Read about load balancer from here

Need Load balabncer in front of :

- Chat server (Map each user ID to a server that holds connection to user and then direct request to server)

- Cache server

Fault replication

Reed-Solomon encoding to distribute and replicate data

More readings

Encourage to subscribe & go through course in more details from Educative.io - here