Design Photo Sharing App

Definition

A software application that provides a platform to upload, view, and search photos, and to follow and search for other users. The platform must provide a seamless experience on all devices.

Requirements

Functional Requirements

- The system must allow users to upload and view photos

- The system must allow users to follow other users

- Users can search for other users and for photos

Non-Functional Requirements

- Low latency: the system should generate feeds in near real time (~200 ms)

- Consistency: the system should show users the same data on all devices

- Availability: the system must be highly available

- Reliability: the system should not lose photos or user data

Capacity Management

- Total users: 500 M

- Daily active users: 1 M

- Average photo size: 200 KB

- Assuming 2M photos are uploaded daily, the photo storage needed per day = 2M * 200 KB = 400 GB

Total photo storage in 10 years = 400 GB * 365 days per year * 10 years = 1,460,000 GB ≈ 1,425 TB (using 1 TB = 1024 GB)
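The storage arithmetic above can be checked with a few lines of Python. This is a sketch that reuses the text's assumptions (2M uploads per day, 200 KB per photo, 10 years) and follows its unit convention: KB to GB in decimal, then dividing by 1024 to reach the ~1425 TB figure. The function name is illustrative.

```python
def estimate_photo_storage(photos_per_day: int, avg_photo_kb: int, years: int) -> dict:
    """Back-of-the-envelope photo storage; ignores compression and replication."""
    per_day_gb = photos_per_day * avg_photo_kb / 1_000_000  # KB -> GB (decimal)
    total_gb = per_day_gb * 365 * years
    return {
        "per_day_gb": per_day_gb,
        "total_gb": total_gb,
        "total_tb_decimal": total_gb / 1000,  # 1 TB = 1000 GB
        "total_tb_binary": total_gb / 1024,   # 1 TB = 1024 GB, as in the text
    }

estimate = estimate_photo_storage(photos_per_day=2_000_000, avg_photo_kb=200, years=10)
print(estimate)
```

With decimal terabytes the same total is 1,460 TB (about 1.46 PB); the two figures differ only in the unit convention.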

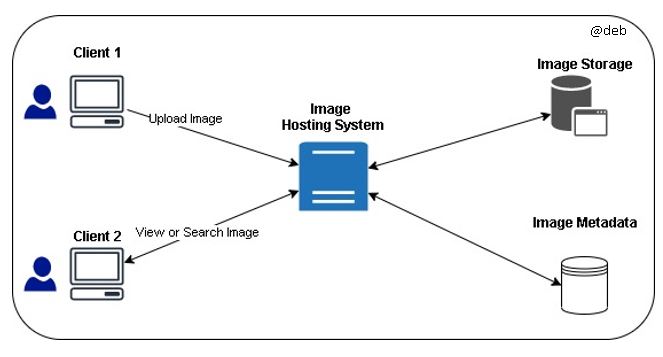

High-level Design

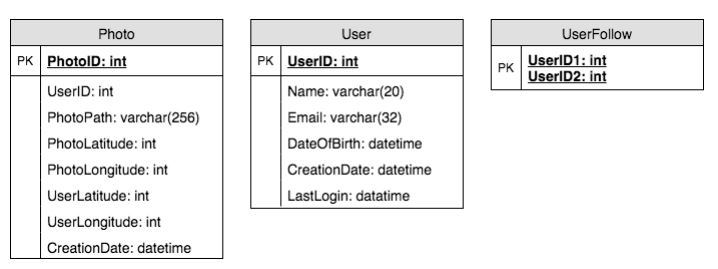

Database Schema

Data Size Estimate

- Estimates cover 10 years of data

- Data compression and replication are out of scope

Users space

- UserID (4 bytes) + Name (20 bytes) + Email (32 bytes) + DateOfBirth (4 bytes) + CreationDate (4 bytes) + LastLogin (4 bytes) = 68 bytes

- For 500 million users * 68 bytes ≈ 32 GB
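One way to sanity-check the 68-byte row is to describe it as a fixed-width binary layout with Python's standard `struct` module. The layout is an assumption for illustration: Name and Email are fixed-length byte fields, and the three dates are stored as 4-byte unsigned integers (for example, Unix timestamps).

```python
import struct

# Hypothetical fixed-width Users row: UserID, Name[20], Email[32],
# DateOfBirth, CreationDate, LastLogin. "<" means little-endian with no
# alignment padding, so the size is exactly the sum of the field sizes.
USER_ROW = struct.Struct("<I20s32sIII")

def users_table_gb(num_users: int) -> float:
    """Users table size in GB (1 GB = 1024**3 bytes), excluding indexes."""
    return num_users * USER_ROW.size / 1024**3

# Pack one sample row; short strings are null-padded to their fixed width.
row = USER_ROW.pack(1, b"alice", b"alice@example.com", 0, 1_700_000_000, 1_700_000_000)
print(USER_ROW.size, len(row), round(users_table_gb(500_000_000), 1))
```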

Photos space

- PhotoID (4 bytes) + UserID (4 bytes) + PhotoPath (256 bytes) + PhotoLatitude (4 bytes) + PhotoLongitude (4 bytes) + UserLatitude (4 bytes) + UserLongitude (4 bytes) + CreationDate (4 bytes) = 284 bytes

- Assuming 2M photo uploads per day: 2M * 284 bytes ≈ 0.53 GB per day

- For 10 years: 0.53 GB * 365 * 10 ≈ 1.88 TB of storage
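The Photos row can be described the same way. Again the layout is an assumption: the four latitude/longitude fields are taken to be 4-byte floats and CreationDate a 4-byte integer. Computing with the unrounded daily figure (about 0.53 GB, not 0.5 GB) is what yields the ~1.88 TB total.

```python
import struct

# Hypothetical Photos row: PhotoID, UserID, PhotoPath[256], PhotoLatitude,
# PhotoLongitude, UserLatitude, UserLongitude, CreationDate (no padding).
PHOTO_ROW = struct.Struct("<II256sffffI")

GB = 1024**3
TB = 1024**4

def photos_table_size(photos_per_day: int, years: int) -> tuple[float, float]:
    """Return (GB per day, TB over `years`) for photo metadata only."""
    per_day_bytes = photos_per_day * PHOTO_ROW.size
    return per_day_bytes / GB, per_day_bytes * 365 * years / TB

per_day_gb, ten_year_tb = photos_table_size(2_000_000, 10)
print(PHOTO_ROW.size, round(per_day_gb, 2), round(ten_year_tb, 2))
```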

User Follows space

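- Assuming 500 million users with 500 follow relationships each on average, and 8 bytes per follow row (two 4-byte UserIDs): 500 million * 500 * 8 bytes ≈ 1.82 TB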

Total space required for all tables for 10 years

Total space = Users space + Photos space + User Follows space

= 32 GB + 1.88 TB + 1.82 TB ≈ 3.7 TB
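Putting the three tables together, here is a small sketch of the 10-year total. It reuses the text's figures; the 8-byte follow row (two 4-byte UserIDs) and 500 follows per user are assumptions, and units are binary (1 TB = 1024**4 bytes).

```python
TB = 1024**4

USERS = 500_000_000
USER_ROW_BYTES = 68
PHOTO_ROW_BYTES = 284
PHOTOS_PER_DAY = 2_000_000
FOLLOWS_PER_USER = 500   # assumed average
FOLLOW_ROW_BYTES = 8     # assumed: two 4-byte UserIDs
DAYS = 365 * 10

users_bytes = USERS * USER_ROW_BYTES
photos_bytes = PHOTOS_PER_DAY * PHOTO_ROW_BYTES * DAYS
follows_bytes = USERS * FOLLOWS_PER_USER * FOLLOW_ROW_BYTES

total_tb = (users_bytes + photos_bytes + follows_bytes) / TB
print(f"users={users_bytes / TB:.3f} TB, photos={photos_bytes / TB:.2f} TB, "
      f"follows={follows_bytes / TB:.2f} TB, total={total_tb:.1f} TB")
```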

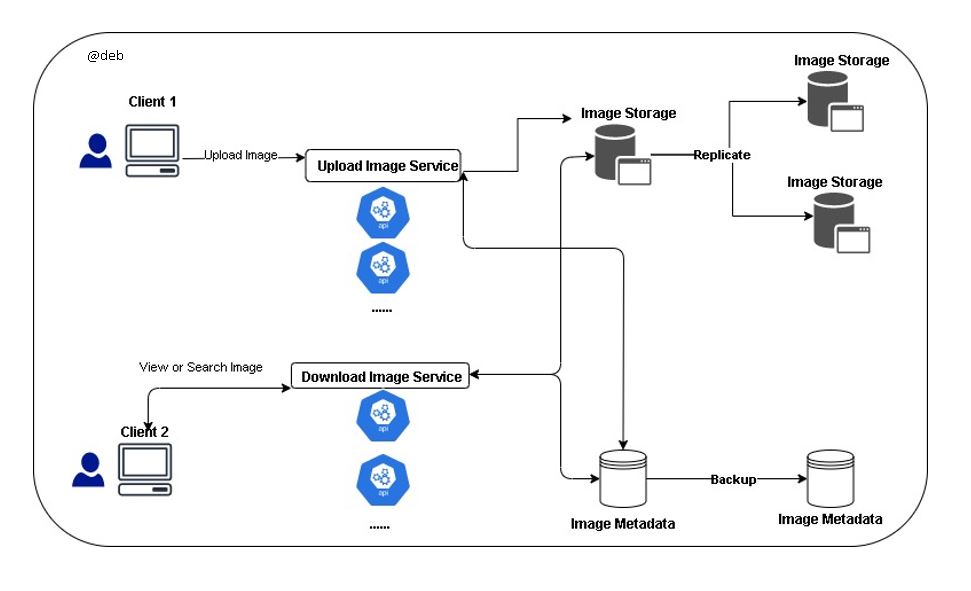

Component Design

- Assume each web server supports at most 500 concurrent connections

- This means at most 500 concurrent uploads or reads per server

- Use separate, dedicated servers for reads and uploads, so that uploads don't hog the system and each path can be scaled independently

- Store multiple copies of each file

- Run multiple replicas of each server (this redundancy removes single points of failure)
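To make the read/upload split concrete, here is a sketch of two independent connection limits, each capped at the assumed 500 connections. `ConnectionLimiter` is a hypothetical class built on `threading.BoundedSemaphore`; in a real deployment the limit would more likely be enforced by the web server or load balancer configuration.

```python
import threading

class ConnectionLimiter:
    """Hypothetical cap on concurrent connections for one group of servers."""

    def __init__(self, max_connections: int = 500):
        self._slots = threading.BoundedSemaphore(max_connections)

    def try_acquire(self) -> bool:
        # Non-blocking: reject the request when all slots are taken.
        return self._slots.acquire(blocking=False)

    def release(self) -> None:
        self._slots.release()

# Separate pools: a burst of uploads can exhaust only the upload slots,
# so reads keep being served.
upload_pool = ConnectionLimiter(500)
read_pool = ConnectionLimiter(500)
```

With a single shared pool, 500 slow uploads would leave no slot for reads; splitting the pools bounds that interference.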

Data Sharding

- Partition data based on UserID

- Assume one shard holds 1 TB; with 3.7 TB of data we would need 4 shards
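A minimal sketch of UserID-based partitioning, assuming a simple modulo mapping (the text does not specify the mapping function) and the 1 TB per shard capacity above.

```python
import math

SHARD_CAPACITY_TB = 1.0
TOTAL_DATA_TB = 3.7
NUM_SHARDS = math.ceil(TOTAL_DATA_TB / SHARD_CAPACITY_TB)  # 3.7 / 1.0 rounded up

def shard_for_user(user_id: int, num_shards: int = NUM_SHARDS) -> int:
    """All rows belonging to a user (profile, photos, follows) land on one shard."""
    return user_id % num_shards

print(NUM_SHARDS, shard_for_user(10))
```

Because each user's data lives on a single shard, a very active user or an influencer turns that shard into a hot spot. Note also that with plain modulo, changing the shard count remaps most users.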

A few Questions

- How do we handle influencers, i.e., users with many followers?

- How do we handle users who post far more photos than others?

- How do we handle a user whose photos do not fit in one shard?

- If one shard is lost, all users stored on it might experience availability issues. How do we handle that?

Possible solution: if we generate a unique PhotoID first and then find the shard number through “PhotoID % 10”, the problems above might be (partially) solved.
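A sketch of the PhotoID-based alternative, using the `PhotoID % 10` mapping from the text (so 10 shards). The helper names are hypothetical. It shows that one user's photos spread across shards; the trade-off is that fetching all of a user's photos then requires querying several shards.

```python
NUM_SHARDS = 10

def shard_for_photo(photo_id: int, num_shards: int = NUM_SHARDS) -> int:
    return photo_id % num_shards

def shards_for_user_photos(photo_ids: list[int]) -> dict[int, list[int]]:
    """Group one user's photo IDs by the shard that stores each of them."""
    placement: dict[int, list[int]] = {}
    for photo_id in photo_ids:
        placement.setdefault(shard_for_photo(photo_id), []).append(photo_id)
    return placement

# Hypothetical user who uploaded 20 photos with consecutive IDs.
placement = shards_for_user_photos(list(range(1001, 1021)))
print({shard: len(ids) for shard, ids in sorted(placement.items())})
```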

Generate Photo IDs

- Dedicate a separate database instance to generating auto-incrementing IDs.

- Assuming a PhotoID fits into 64 bits, we can define a table containing only a 64-bit ID field.

- Whenever we add a photo to our system, we insert a new row in this table and use that ID as the PhotoID of the new photo.
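A minimal sketch of the dedicated ID table, using SQLite from Python's standard library as a stand-in for the separate database instance. SQLite's `INTEGER PRIMARY KEY` holds a 64-bit signed integer, and `AUTOINCREMENT` ensures IDs of deleted rows are never reused. The table and function names are hypothetical.

```python
import sqlite3

def open_id_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # A table holding nothing but an auto-incrementing 64-bit key.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS photo_ids (id INTEGER PRIMARY KEY AUTOINCREMENT)"
    )
    return conn

def next_photo_id(conn: sqlite3.Connection) -> int:
    # Insert an empty row; the generated key becomes the new PhotoID.
    cur = conn.execute("INSERT INTO photo_ids DEFAULT VALUES")
    conn.commit()
    return cur.lastrowid

conn = open_id_db()
print([next_photo_id(conn) for _ in range(3)])
```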

To avoid a single point of failure, we can run two such databases:

- One generates even-numbered IDs and the other odd-numbered IDs.

- Place a load balancer in front of both databases to round-robin between them and to handle downtime.
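The odd/even scheme with round-robin and failover can be simulated in a few lines. `IdServer` and `RoundRobinBalancer` are hypothetical stand-ins for the two databases and the load balancer; in MySQL, the odd/even split is commonly configured with the `auto_increment_increment` and `auto_increment_offset` system variables.

```python
import itertools

class IdServer:
    """Hypothetical ID database producing start, start + 2, start + 4, ..."""

    def __init__(self, start: int):
        self._ids = itertools.count(start, 2)
        self.up = True

    def next_id(self) -> int:
        return next(self._ids)

class RoundRobinBalancer:
    def __init__(self, servers: list[IdServer]):
        self._servers = servers
        self._ring = itertools.cycle(servers)

    def next_id(self) -> int:
        # Try each server at most once per call, skipping any marked down.
        for _ in range(len(self._servers)):
            server = next(self._ring)
            if server.up:
                return server.next_id()
        raise RuntimeError("no ID server available")

odd, even = IdServer(1), IdServer(2)
balancer = RoundRobinBalancer([odd, even])
print([balancer.next_id() for _ in range(4)])
```

Because the two sequences never overlap, IDs stay unique even when one server is down; they are just no longer strictly increasing across servers.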

Cache & Load Balancer


We need:

- Photo cache servers

- CDN (Content Delivery Network) for globally distributed users

- Cache for user metadata (LRU eviction)
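A minimal LRU cache for user metadata, sketched with `collections.OrderedDict`. The class is illustrative, not a production cache server; for memoizing a single Python function, the standard library's `functools.lru_cache` is an alternative.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional

class LRUCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self._data: OrderedDict = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

cache = LRUCache(capacity=2)
cache.put(1, {"name": "alice"})
cache.put(2, {"name": "bob"})
cache.get(1)                     # user 1 is now most recently used
cache.put(3, {"name": "carol"})  # evicts user 2
```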

Apply the 80-20 rule

About 20% of the daily read volume of photos generates 80% of the traffic, because certain photos are so popular that most people view them. This suggests caching about 20% of the daily read volume of photos and metadata.
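To illustrate the 80-20 rule, the sketch below picks the top 20% of photos by read count and reports what share of reads they cover. The read counts are synthetic, chosen only to show the calculation; they are not measurements.

```python
def hot_set(read_counts: dict[int, int], fraction: float = 0.2) -> tuple[list[int], float]:
    """Return the top `fraction` of photos by reads and the share of reads they cover."""
    ranked = sorted(read_counts, key=read_counts.get, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    top = ranked[:k]
    total = sum(read_counts.values())
    share = sum(read_counts[p] for p in top) / total if total else 0.0
    return top, share

# Synthetic example: 2 popular photos and 8 rarely viewed ones.
reads = {1: 400, 2: 400, **{pid: 25 for pid in range(3, 11)}}
top, share = hot_set(reads)
print(top, share)
```

Caching just the returned `top` set would serve the covered share of reads from the cache.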

Further Reading

For more detail, see the corresponding course on Educative.io.